Problem Statement

The integration of artificial intelligence (AI) into healthcare could transform medical services, offering improved diagnostics, personalized treatment plans, and more efficient healthcare delivery. However, the rapid adoption of AI also raises significant ethical challenges that must be addressed to preserve the quality, fairness, and human-centric nature of healthcare services.

One of the primary concerns is bias in AI algorithms. AI systems are trained on large datasets. If these datasets are not diverse and representative, or if the labels the models learn from reflect historical inequities, the resulting algorithms may perpetuate existing biases in healthcare. For example, a widely used U.S. risk-prediction algorithm that used healthcare costs as a proxy for health needs was found to underestimate the needs of Black patients, because less money had historically been spent on their care. Such bias can lead to unequal treatment recommendations, misdiagnoses, and disparities in healthcare outcomes across demographic groups, particularly for marginalized communities. Addressing it requires a proactive approach to data collection, algorithm development, and continuous monitoring to keep AI systems fair and equitable.

Another critical challenge is the risk of dehumanizing patient care. While AI can assist healthcare providers in making decisions, there is a concern that over-reliance on AI-driven tools could diminish the importance of the human touch in medicine. Healthcare is not just about diagnosing and treating diseases; it is also about providing compassionate care, understanding patient needs, and building trust. If AI tools are used without careful consideration of these human elements, there is a risk that patient care could become overly mechanical and impersonal.

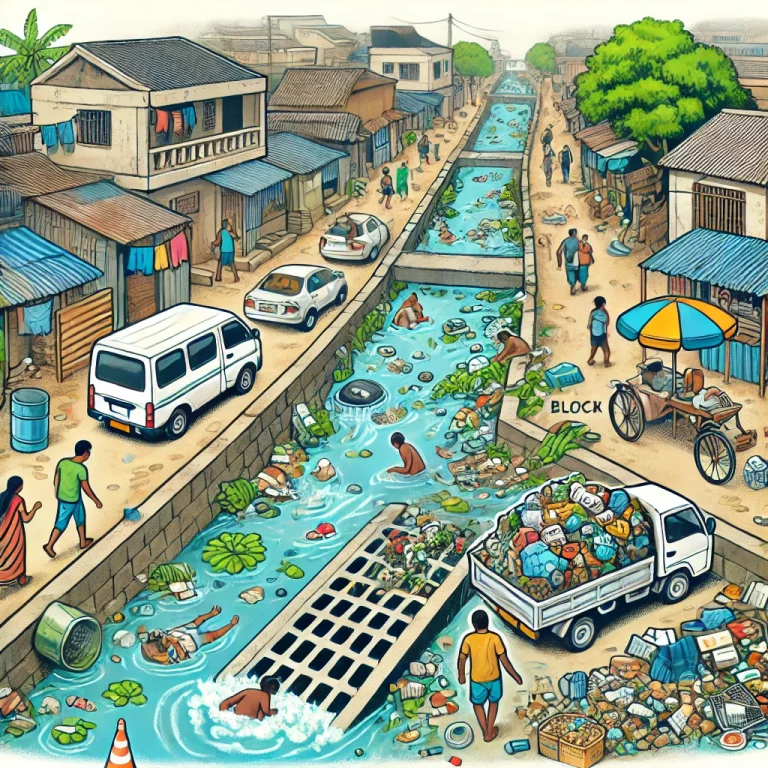

Finally, there is the issue of unequal access to AI-driven healthcare tools. AI technologies are often expensive to develop and deploy, and there is a risk that these advanced tools may be accessible only to wealthier healthcare systems and patients. This could exacerbate existing healthcare disparities, leaving underserved communities without access to the latest advancements in medical technology. Ensuring that AI-driven healthcare tools are accessible to all, regardless of socioeconomic status, is essential to maintaining fairness and equity in healthcare.

Addressing these ethical challenges requires a multifaceted approach that includes the development of inclusive AI algorithms, the preservation of human-centric care, and the promotion of equitable access to AI technologies.

Pain Points

- Bias in AI Algorithms: Risk of perpetuating existing biases in healthcare due to non-representative training data.

- Dehumanizing Patient Care: Over-reliance on AI tools could reduce the emphasis on compassionate, human-centered care.

- Unequal Access: High costs of AI technologies may limit access to advanced healthcare tools for underserved communities.

- Transparency and Accountability: Difficulty in understanding and explaining AI-driven decisions in healthcare.

- Trust in AI: Patients and providers may be hesitant to trust AI tools, particularly if they are perceived as biased or impersonal.

- Data Privacy: Concerns about the security and privacy of patient data used in AI systems.

- Ethical Governance: Lack of clear ethical guidelines and frameworks for the use of AI in healthcare.

- Regulatory Challenges: Navigating complex regulations for AI in healthcare, which may vary by region.

- Algorithmic Accountability: Difficulty in holding AI systems accountable for errors or unintended consequences.

- Impact on Healthcare Workforce: Potential for AI to disrupt traditional roles in healthcare, leading to workforce displacement.

Future Vision

Our platform envisions a future where AI is seamlessly integrated into healthcare in a way that enhances the quality, fairness, and humanity of medical services. By developing inclusive and transparent AI algorithms, the platform will help ensure that AI-driven healthcare tools deliver equitable care to all patients, regardless of their background or demographics. The platform will promote the use of diverse and representative datasets in the training of AI models, with continuous monitoring and evaluation to detect and correct any biases that may arise.
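The continuous bias monitoring described above could, for example, take the form of a periodic subgroup audit. The sketch below is a minimal illustration, assuming a binary classifier whose predictions and ground-truth labels are available alongside a demographic attribute for each patient. The function names `subgroup_rates` and `flag_disparities` and the 0.1 gap threshold are hypothetical choices, not part of any existing platform or library. The audit compares true-positive rates (sensitivity) across groups, an "equal opportunity" style check that targets missed diagnoses.

```python
import math
from collections import defaultdict
from typing import Dict, Hashable, Optional, Sequence, Tuple


def subgroup_rates(
    y_true: Sequence[int], y_pred: Sequence[int], groups: Sequence[Hashable]
) -> Dict[Hashable, Dict[str, float]]:
    """Compute per-group selection rate and true-positive rate (sensitivity).

    y_true and y_pred hold binary labels (1 = condition present / flagged by the model).
    """
    stats = defaultdict(lambda: {"n": 0, "flagged": 0, "positives": 0, "true_pos": 0})
    for truth, pred, group in zip(y_true, y_pred, groups):
        s = stats[group]
        s["n"] += 1
        s["flagged"] += pred
        s["positives"] += truth
        s["true_pos"] += int(truth == 1 and pred == 1)

    rates = {}
    for group, s in stats.items():
        rates[group] = {
            "selection_rate": s["flagged"] / s["n"],
            # Sensitivity is undefined for a group with no positive cases.
            "tpr": s["true_pos"] / s["positives"] if s["positives"] else float("nan"),
        }
    return rates


def flag_disparities(
    rates: Dict[Hashable, Dict[str, float]], metric: str = "tpr", max_gap: float = 0.1
) -> Optional[Tuple[float, Hashable, Hashable]]:
    """Return (gap, worst_group, best_group) if the metric gap exceeds max_gap, else None."""
    # Ignore groups where the metric is undefined (NaN).
    values = {g: r[metric] for g, r in rates.items() if not math.isnan(r[metric])}
    if len(values) < 2:
        return None
    worst = min(values, key=values.get)
    best = max(values, key=values.get)
    gap = values[best] - values[worst]
    return (gap, worst, best) if gap > max_gap else None


if __name__ == "__main__":
    # Toy data: the model misses more true cases in group "B" than in group "A".
    y_true = [1, 1, 0, 1, 1, 1, 0, 1]
    y_pred = [1, 1, 0, 1, 0, 0, 0, 1]
    groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
    rates = subgroup_rates(y_true, y_pred, groups)
    print(rates)
    print(flag_disparities(rates))  # flags group B (sensitivity 1/3 vs 1.0 for group A)
```

Comparing true-positive rates is only one fairness criterion; a fuller audit would typically also examine false-positive rates and calibration per group, and treat results from small subgroups with caution, since a few patients can swing the rates considerably.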

To preserve the human element in healthcare, the platform will advocate for a balanced approach to AI integration, where AI is used to complement, rather than replace, the compassionate care provided by healthcare professionals. This includes developing AI tools that enhance, rather than detract from, the patient-provider relationship, and ensuring that AI-generated recommendations support clinical judgment rather than dictate it.

The platform will also work to democratize access to AI-driven healthcare tools, aiming to make these technologies available to all healthcare systems, regardless of their size or resources. By partnering with governments, NGOs, and private sector organizations, the platform will help develop and distribute AI tools that are affordable and accessible to underserved communities, reducing healthcare disparities and promoting health equity.

Finally, the platform will provide ethical guidelines, educational resources, and regulatory support to healthcare providers and technology companies, helping them navigate the complex ethical landscape of AI in healthcare. By fostering collaboration between stakeholders, the platform will help ensure that AI is used responsibly and ethically, with a focus on improving patient outcomes and maintaining trust in healthcare systems.

Use Cases

- Bias Detection and Mitigation: AI tools for identifying and correcting biases in healthcare algorithms.

- Human-Centric AI Integration: AI solutions that enhance the patient-provider relationship and support compassionate care.

- Affordable AI Tools: Development and distribution of low-cost AI-driven healthcare tools for underserved communities.

- Transparent AI Models: Tools that provide clear explanations of AI-driven decisions, improving transparency and accountability.

- Ethical AI Governance: Frameworks and guidelines for the ethical use of AI in healthcare.

- Data Privacy Protections: Security and privacy measures, such as encryption, access controls, and de-identification, to protect patient data used in AI systems.

- Regulatory Compliance Support: Resources to help healthcare providers and tech companies navigate AI regulations.

- Educational Programs: Training for healthcare professionals on the ethical implications of AI in healthcare.

- Patient Trust Initiatives: Programs to build patient trust in AI-driven healthcare tools through transparency and education.

- Workforce Transition Support: Initiatives to support healthcare workers in adapting to AI-driven changes in their roles.

Target Users and Stakeholders

- Users: AI Developers, Healthcare Providers, and Ethical Governance Bodies

- Age Group: 30-60 years

- Gender: M/F

- Usage Pattern: Regular usage for developing, implementing, and governing AI in healthcare

- Benefit: Enhanced fairness, transparency, and human-centricity in AI-driven healthcare

- Stakeholders:

  - Healthcare Providers: Hospitals, clinics, and healthcare networks integrating AI tools into patient care

  - Technology Companies: Developers of AI-driven healthcare solutions and diagnostics

  - Regulatory Bodies: Organizations responsible for ensuring the ethical use of AI in healthcare

  - Patients: Individuals receiving care from AI-assisted healthcare systems

  - Academic Institutions: Universities and research centers studying the ethical implications of AI in healthcare

Key Competition

- Google Health: Develops AI-driven healthcare tools with a focus on ethical AI integration.

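- IBM Watson Health: Provides AI solutions for healthcare, with efforts to ensure fairness and transparency. (IBM sold its Watson Health assets to Francisco Partners in 2022; the business now operates as Merative.)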

- Philips Healthcare: Focuses on AI-driven diagnostics while addressing ethical concerns in AI usage.

- Tempus: Leverages AI to personalize cancer treatment, with attention to ethical AI implementation.

- Babylon Health: Uses AI for telemedicine and diagnostics, with a focus on democratizing access to healthcare.

Products/Services

- Google Health AI Tools: AI-driven diagnostics and healthcare tools with a focus on ethical integration.

- IBM Watson Health AI: Comprehensive AI solutions for healthcare, prioritizing fairness and transparency.

- Philips AI Diagnostics: AI-powered diagnostics with safeguards against bias and ethical concerns.

- Tempus AI for Cancer: AI solutions for personalized cancer treatment with ethical AI considerations.

- Babylon AI Telemedicine: AI-driven telemedicine tools aimed at expanding access to healthcare.

Active Startups

- PathAI: Develops AI-driven pathology solutions with a focus on ethical AI practices.

- Bay Labs (now Caption Health): Combines AI with echocardiography to improve heart disease diagnosis while addressing ethical AI concerns.

- Arterys: Provides AI-powered medical imaging solutions with attention to fairness and transparency (acquired by Tempus in 2022).

- Zebra Medical Vision: Uses AI for radiology diagnostics, with a commitment to ethical AI development (acquired by Nanox in 2021).

- Viz.ai: Focuses on AI-driven stroke detection, ensuring ethical use and patient safety.

- Qure.ai: Develops AI solutions for radiology, with a focus on addressing bias in AI algorithms.

- Aidence: Provides AI for lung cancer detection, with ethical considerations in AI implementation (acquired by RadNet).

- Aidoc: Uses AI to detect acute abnormalities in medical imaging, with an emphasis on ethical AI use.

- Owkin: Focuses on AI-driven drug discovery with a commitment to ethical data use and fairness.

- Ezra: Offers AI-powered MRI scanning for early cancer detection, with attention to ethical implications.

Ongoing Work in Related Areas

- Bias Mitigation Research: Developing techniques to detect and correct bias in AI healthcare algorithms.

- Human-AI Collaboration: Studying the interaction between AI tools and healthcare providers to enhance patient care.

- Ethical AI Frameworks: Creating guidelines and frameworks for the responsible use of AI in healthcare.

- AI Accessibility Initiatives: Promoting the development of affordable AI-driven healthcare tools for all populations.

- Patient Trust Building: Implementing strategies to build trust in AI technologies among patients and healthcare providers.

Recent Investment

- PathAI: $75M in Series B funding led by General Catalyst, April 2019.

- Arterys: $30M in Series C funding led by Temasek Holdings, December 2018.

- Qure.ai: $16M in Series A funding led by Sequoia India, January 2020.

- Viz.ai: $50M in Series B funding led by Greenoaks, March 2019.

- Aidoc: $27M in Series B funding led by Square Peg Capital, April 2019.

Market Maturity

The market for AI-driven healthcare solutions is maturing rapidly, with significant advances in diagnostics, treatment planning, and patient care. Large companies such as Google Health, IBM Watson Health, and Philips Healthcare are integrating AI into many aspects of healthcare while addressing ethical challenges, and startups such as PathAI, Arterys, and Viz.ai are innovating in niche areas like medical imaging and pathology, with an emphasis on ethical AI practices. Growing investment in bias mitigation, human-AI collaboration, and ethical AI frameworks is shaping the field, with the aim of ensuring that AI technologies enhance rather than compromise the quality and fairness of medical services. As the market evolves, we expect to see more integrated and ethically responsible AI solutions that prioritize patient outcomes and health equity.

Summary

The integration of artificial intelligence (AI) in healthcare brings forth ethical challenges, including bias in AI algorithms, the risk of dehumanizing patient care, and the potential for unequal access to AI-driven healthcare tools. These issues can compromise the quality and fairness of medical services, especially if not addressed proactively. Our proposed platform leverages bias detection tools, human-centric AI integration, affordable AI solutions, and ethical governance frameworks to address these challenges. Key pain points include bias in AI algorithms, dehumanizing patient care, unequal access, transparency and accountability, trust in AI, data privacy, ethical governance, regulatory challenges, algorithmic accountability, and impact on the healthcare workforce.

Target users include AI developers, healthcare providers, and ethical governance bodies, with stakeholders encompassing healthcare providers, technology companies, regulatory bodies, patients, and academic institutions. Key competitors such as Google Health, IBM Watson Health, Philips Healthcare, Tempus, and Babylon Health offer various AI-driven healthcare solutions, while startups such as PathAI, Bay Labs, and Arterys are driving innovation in this space. Recent funding rounds for healthcare AI startups indicate strong investor interest in the sector, suggesting growth potential for platforms that address its ethical challenges.

By addressing these challenges and leveraging advanced technologies, our platform aims to create a fair, transparent, and human-centric AI-driven healthcare ecosystem that improves patient outcomes and maintains trust in healthcare systems.